Why Systems Fail Even When Politicians Try

P2.1.2 Engineering for Complexity: Failure Modes

From Diagnosis to Deeper Diagnosis

In Part 1, we diagnosed the anti-intellectual architecture of American politics. Three structural incentives—the soundbite imperative, the expertise paradox, and the precedent trap—create a system that actively punishes nuance. Politicians who acknowledge complexity lose to those who offer simple answers. The system selects for intellectual regression.

The government shutdown demonstrated these failures in real time. As Day 36 became Day 40, now reaching Day 43, millions of Americans confronted the consequences: 42 million SNAP recipients receiving half their food assistance, 58,600 children losing Head Start access, airports experiencing cascading failures from controller shortages. The political debate remained stubbornly simple—”Whose fault is it?”—while the operational reality grew increasingly complex.

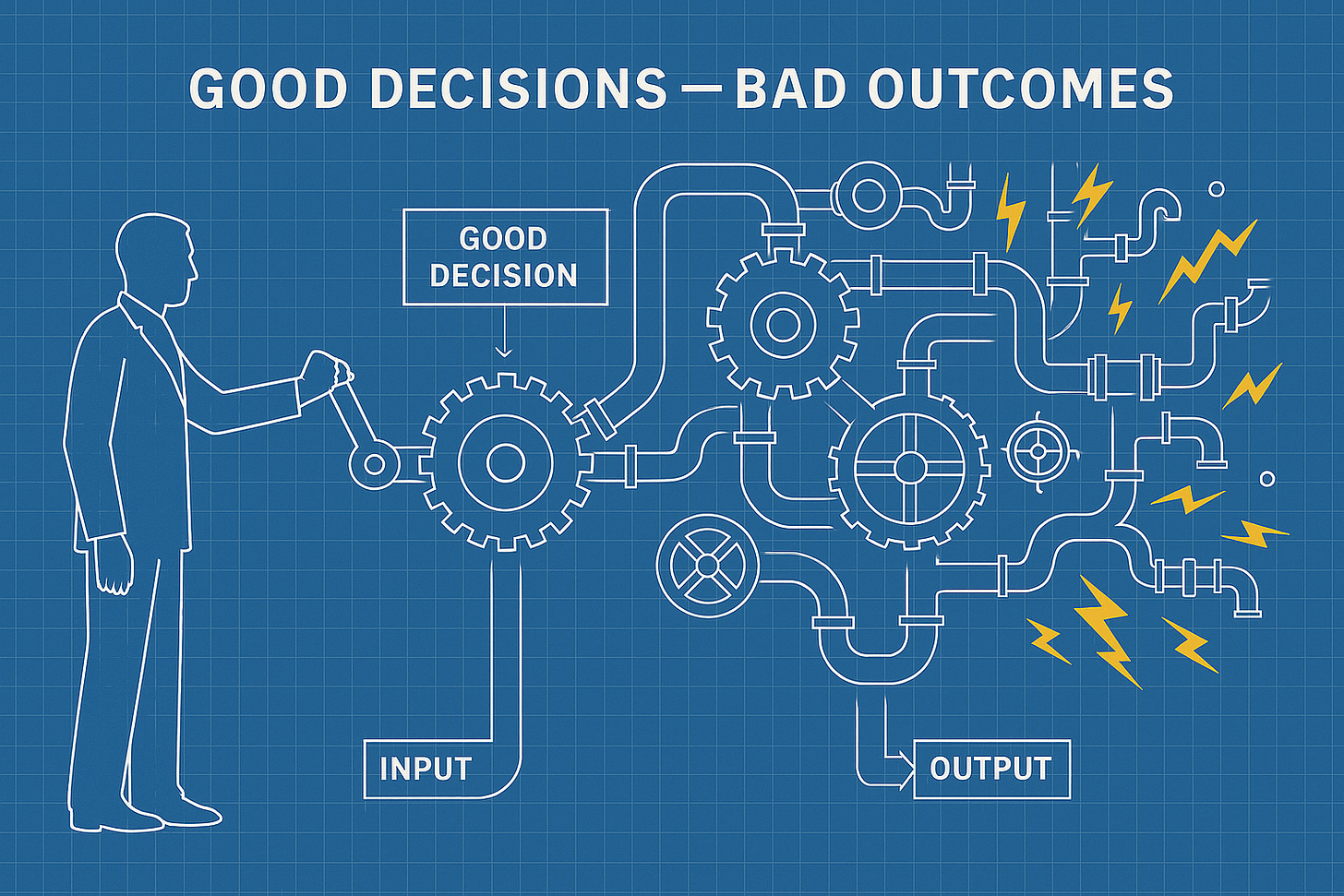

But the diagnosis raises a deeper question: Why does the current system fail even when politicians try?

The immediate response reveals our instinctive explanation: “It’s about greedy politicians. Elect better people and throw the bums out.”

Here’s the uncomfortable truth: This analysis assumes politicians are greedy. That’s not a bug in the argument—it’s the foundation. Politicians are rationally self-interested actors optimizing for re-election, career advancement, and post-office opportunities. That’s human nature. That’s always been true. That will always be true.

Some people find a $20 bill and keep it. Others turn it in. That variation exists. But systems design doesn’t depend on hoping everyone turns in the twenty. Good design assumes some people won’t—and builds accordingly.

The question isn’t whether politicians are greedy. The question is: What does the architecture incentivize them to do with that greed?

In this part, we’ll examine two catastrophic failure modes that emerge when the anti-intellectual architecture confronts genuine complexity: the novelty gap (inability to recognize genuinely new problems) and the technical debt cascade (how refusing complexity upfront creates worse complexity downstream).

But first, we must understand not just THAT the architecture fails, but HOW and WHY—so we can design systems that channel the same human nature toward better outcomes.

Designing for Human Nature, Not Around It

Before examining the specific failures, we need to establish a foundational principle: good systems design accounts for self-interested behavior, not around it.

The Engineering Standard

Consider your car’s engine. Engineers don’t design engines assuming perfect fuel, clean air, and ideal conditions. They design for reality: contaminated fuel, dirty air, extreme temperatures, driver error, irregular maintenance—and people acting in their own self-interest.

Your car calls for 91 octane premium fuel. But premium costs more. So someone—acting in their own rational self-interest—puts in 85 octane regular instead. Saves $10 per tank. Completely predictable self-interested behavior.

Does the engine immediately fail? No. Because it’s designed for this.

The knock sensor hears the engine starting to knock—pre-ignition from lower octane fuel. It sends a signal to the engine control unit. The ECU retards the ignition timing. The engine keeps running, not optimally but functionally. You lose some horsepower, maybe some fuel economy, but you don’t blow the engine.

The system compensates for self-interested behavior automatically. The engineer didn’t design hoping everyone would use premium fuel. The engineer designed knowing some people wouldn’t—and built a system that works anyway.

That’s why modern engines have oxygen sensors, knock sensors, mass airflow sensors, coolant temperature sensors. The O2 sensor constantly monitors the exhaust—when it detects the mixture running lean, it signals the computer to adjust fuel injection. The knock sensor prevents damage from cheap fuel. The mass airflow sensor compensates for dirty air filters.

These sensors aren’t there because engineers are pessimistic. They’re there because good design accounts for self-interested behavior and imperfect conditions. The engine doesn’t require perfect fuel or perfect behavior. It compensates automatically. That’s sophisticated engineering.

The same principle applies to governance. We don’t design systems assuming perfect politicians. We design for reality: ambitious people seeking power, interest groups seeking advantage, voters with limited attention, incomplete information, time constraints, and yes—greed.

The question is whether the system channels that self-interest toward good outcomes or bad ones.

What Current Architecture Incentivizes

The current system doesn’t prevent greed. It channels greed toward specific behaviors.

Want to get re-elected? (self-interest) → Offer soundbites, not solutions → Complex policy creates attack ad vulnerability → Simple promises win primaries

Want to avoid primary challenges? (self-preservation) → Project false certainty → Never say “I don’t know” → Never acknowledge trade-offs → Nuance reads as weakness to primary voters

Want lucrative lobbying job after office? (future income) → Serve donor interests → Depend on lobby-provided research → Maintain relationships that will pay off later

Want to survive in the system? (career preservation) → Cite precedent for political cover → Apply old solutions to novel problems → Never admit a problem is genuinely new

These aren’t moral failures. They’re rational responses to structural incentives. A legislator who ignores these incentives—who offers nuanced policy, admits uncertainty, proposes novel solutions—loses elections. The system selects them out.

The greed is constant. The architecture determines where it flows.

The Menendez Principle: Individual vs. Systemic Corruption

Yes, Senator Bob Menendez took gold bars as bribes. He got caught. He was prosecuted. He was convicted. The system worked—eventually.

That’s individual corruption. It’s illegal. We have laws against it. We can prosecute it.

But notice what the current system cannot detect or punish:

Legislators who vote exactly as lobbies want because they’re dependent on lobby-provided research (legal, rational, systematic)

Legislators who reject nuanced policy because it creates soundbite vulnerability (legal, rational, systematic)

Legislators who apply wrong solutions because precedent provides political cover (legal, rational, systematic)

Legislators who compress complex problems into false binaries because that’s what the format demands (legal, rational, systematic)

These aren’t corrupt. They’re rational responses to perverse incentives. And that’s more dangerous than individual corruption because it’s not prosecutable. It’s just how the system works.

Individual corruption is rare and prosecutable. Systemic dysfunction is pervasive and legal. The latter is far more dangerous because it affects every decision, every day, on every issue.

The same self-interest, channeled differently, produces different outcomes. But first, we must understand the two ways this architecture fails when confronting complexity.

The Novelty Gap: When Analogous Reasoning Catastrophically Fails

The anti-intellectual architecture’s most dangerous feature isn’t that it makes thinking difficult—it’s that it makes recognizing novelty impossible. The system cannot distinguish between “old problem requiring new thinking” and “genuinely new problem requiring new frameworks.” Every problem gets forced into existing categories. Every solution cites precedent. Every framework is borrowed from the past.

When problems are genuinely novel, this produces catastrophic failure.

AI Regulation: The Unprecedented Challenge

Artificial intelligence has no historical precedent. It’s not like cars—vehicles that move people. It’s not like phones—devices that enable communication. It’s not like the internet—a network that connects computers. AI is a fundamentally different category of technology: systems that can perform cognitive tasks, generate novel content, make autonomous decisions, and potentially exceed human capability in specific domains.

The political system, optimized for precedent citation and soundbite communication, defaults to binary extremes:

“Total ban”—outlaw the development of AI systems (impossible and counterproductive) “Zero regulation”—let the technology develop without any oversight (dangerous and irresponsible)

Both are wrong because AI isn’t one thing. It’s a technology category like “chemistry.” Different applications have radically different risk profiles. Using AI to recommend movies on Netflix differs fundamentally from using AI to make medical diagnoses, autonomous weapons targeting decisions, or judicial sentencing recommendations.

Reasoning by analogy fails completely. “Regulate it like social media” misses that AI systems have fundamentally different capabilities. “Regulate it like medical devices” misses that AI applications span far beyond healthcare. “Regulate it like nuclear technology” misses that AI development is globally distributed and largely software-based.

What nuanced AI policy actually requires is novel:

Compute allocation frameworks (who gets access to the massive computational resources required for frontier AI training?)

Intellectual property regimes (who owns AI-generated content? How do we handle AI training on copyrighted material?)

Safety guardrails for high-risk applications (what standards apply to AI systems making medical, legal, or military decisions?)

Liability frameworks (who’s responsible when AI systems produce harmful outputs—the developer, the deployer, the user?)

Export controls (how do we prevent adversaries from accessing strategically significant AI capabilities?)

Transparency requirements (what disclosure is needed for AI decision-making in sensitive domains?)

Red-teaming and testing standards (how do we evaluate whether AI systems are safe before deployment?)

These are novel policy levers. No historical precedent exists for regulating a technology that can reason, generate, and decide. Analogous reasoning produces incoherent policy because the analogy always fails at the critical dimension. The current architecture cannot process this novelty because it’s optimized for precedent citation—it demands that new problems fit old categories.

Climate Policy: The Novel Combination Problem

Climate change presents a different form of novelty: not a single unprecedented technology, but an unprecedented combination of challenges requiring an unprecedented combination of tools.

The precedent trap forces simplification:

“It’s like 1970s environmental regulation” → Apply cap-and-trade mechanisms only “It’s like industrial policy” → Subsidize clean energy only “It’s like a wartime emergency” → Command-and-control regulation only

Each analogy captures one dimension while missing the rest. Climate requires a novel combination that no historical precedent provides:

Market mechanisms (carbon pricing to internalize externalities—basic economics, but politically toxic)

Industrial policy (strategic subsidies to accelerate clean technology deployment—effective but requires picking winners)

R&D investment (developing breakthrough technologies for problems we can’t solve with current tech—high-risk, long-timeline)

Adaptation infrastructure (building resilience for impacts we can’t prevent—expensive, unglamorous, essential)

International coordination (global problem requiring global solution—difficult when nations have conflicting interests)

No historical precedent effectively combines all these tools. Each tool has a political constituency that wants ONLY that tool. Market advocates reject subsidies. Industrial policy advocates reject market mechanisms. Each side claims their preferred approach is sufficient.

The sophistication required—coordinating market signals with strategic investment, across multiple decades, with international cooperation, while building adaptation capacity—breaks the soundbite imperative completely. You cannot explain this in ten seconds. You cannot compress it into “market solution” versus “government solution.” You cannot reason by analogy to past environmental challenges because the scale, timeline, and coordination requirements are unprecedented.

The analogous reasoning produces suboptimal compromises that satisfy no one because the problem genuinely requires novel frameworks that don’t fit existing categories.

Digital Services: When Fundamental Assumptions Break

The tax code was designed for physical goods: tangible objects that exist in specific locations, get manufactured in factories, transported in trucks, and sold in stores. Every assumption about taxation—where value is created, where transactions occur, how to allocate revenue across jurisdictions—depends on physicality.

Digital services break every assumption.

Where is software “located”? The code might be written in California, compiled on servers in Oregon, distributed from data centers in Virginia, and accessed by users in Ohio. Which jurisdiction gets to tax what?

Is software a product or a service? If I buy it once, it’s a product. If I subscribe monthly, it’s a service. But what if I subscribe to access that gets continuously updated? Is that a product that changes or a service that persists?

How do you tax cross-border digital transactions? If a French user accesses a U.S.-based platform that serves ads from a British company to fund content created by a Canadian, who owes tax to whom?

What about user-generated value? Social media platforms don’t create most of their content—users do. How do you value and tax the platform’s contribution versus the users’ contribution? How do you handle network effects where value increases with each additional user?

Analogous reasoning fails at every step:

“Tax it like books” → But books are physical objects with clear location and transaction points “Tax it like legal services” → But legal services are bespoke interactions, not scalable digital products “Don’t tax it” → But then physical goods companies face competitive disadvantage against digital alternatives

The only coherent approach is first principles: What are we trying to accomplish with taxation?

Revenue generation (fund government services)

Behavior modification (incentivize/disincentivize activities)

Equity and fairness (progressive distribution of burden)

Design tax policy from those principles, not from “what did we do before?” But the current system cannot engage in first-principles thinking because it’s optimized for precedent citation. Novel problems get forced into old categories even when the fit is obviously wrong.

The Pattern

Across AI regulation, climate policy, and digital taxation, the same failure emerges: the architecture cannot recognize genuine novelty. Novel problems require novel frameworks. Reasoning by analogy produces category errors. First-principles thinking gets punished as academic or impractical. The system forces everything into existing precedents because that’s what the incentive structure rewards.

When genuinely new problems arise—and they arise constantly in a rapidly changing world—the architecture fails catastrophically. Not because the problems are unsolvable, but because the system cannot process them.

The Technical Debt Cascade: How Refusing Complexity Creates Worse Complexity

The architecture’s second major failure mode is subtler but equally destructive: refusing to acknowledge complexity upfront doesn’t eliminate complexity—it just pushes complexity downstream into implementation, where it accumulates as technical debt until systems become unmaintainable.

Simplified Promises Create Convoluted Implementation

Consider a simple political promise: “No one making under $400,000 pays more taxes.”

Clean. Clear. Fits in a soundbite. Wins votes.

The implementation reality: hundreds of pages of tax code addressing questions the promise ignores:

What counts as “income”? Wages clearly do. But what about capital gains? Business income? Dividends? Interest? Rental income? Royalties? Carried interest from private equity? Stock options exercised? Restricted stock units vesting? Crypto gains realized?

What about phase-outs? If the threshold is exactly $400,000, does someone making $399,999 pay nothing more while someone making $400,001 pays significantly more? That creates a massive incentive to manipulate income to stay just below the threshold. So you need phase-outs. But over what range? How fast?

What about credits, deductions, and exemptions? Does “no one under $400K pays more taxes” mean the rate doesn’t increase, or the total bill doesn’t increase? If someone currently pays $50,000 through creative deductions, can they still do that? If not, their bill increases even though their rate didn’t.

How do you prevent gaming? What about pass-through entities that let high-earners structure income as business profits? What about trusts that distribute income to multiple beneficiaries? What about corporate structures that defer compensation? Each gaming strategy requires a countermeasure. Each countermeasure adds pages to the code.

The simple promise doesn’t eliminate this complexity. It just refuses to acknowledge it during the campaign. Then it explodes during implementation into convoluted law that no one understands—not the legislators who voted for it, not the taxpayers trying to comply with it, not even the accountants paid to interpret it.

The technical debt accumulates. The system becomes unmaintainable. Future reforms must work around the accumulated cruft. Eventually, the code becomes so byzantine that even experts can’t predict outcomes with confidence.

This same pattern repeats everywhere:

The Affordable Care Act promised to make healthcare simple and affordable. It produced 900+ pages of legislation that created exchanges, subsidies, mandates, penalties, exemptions, and interacting requirements so complex that even insurance companies struggled to implement them correctly.

No Child Left Behind promised straightforward accountability for schools. It produced teaching-to-the-test, statistical gaming, and perverse incentives that undermined actual education while creating massive compliance burdens.

The Bipartisan Infrastructure Bill promised straightforward spending on roads and bridges. It produced Byzantine allocation formulas distributing funds across dozens of programs with conflicting requirements and overlapping jurisdictions.

The pattern is universal: Political systems that reward simple promises create implementation nightmares. Refusing complexity upfront (admitting that tax policy, healthcare, education, and infrastructure are genuinely complicated) doesn’t eliminate complexity. It just ensures complexity emerges in the worst possible form—as accumulated technical debt that makes systems progressively less functional.

Unintended Consequences Compound Over Time

When we pretend solutions are simple, we ignore feedback loops and second-order effects. The result isn’t just implementation complexity—it’s cascading unintended consequences that require further interventions, each adding more technical debt.

Rent control provides the clearest example. The simple promise: “Cap rents to make housing affordable.”

The immediate effect seems positive—existing renters pay less. But the second-order effects accumulate.

It reduces new construction—why build if you can’t charge market rates to recover costs? It reduces maintenance—why invest in improvements if you can’t raise rents to capture value? It creates misallocation—people stay in rent-controlled apartments they’ve outgrown because moving means losing the controlled rent. It benefits current renters at the expense of future renters—the fixed supply gets allocated to whoever got there first. Each problem requires additional interventions—new construction mandates, maintenance requirements, lottery systems for allocation.[1]

Each intervention creates its own unintended consequences requiring further interventions. The technical debt compounds. The system becomes increasingly dysfunctional despite—or because of—the accumulation of well-intentioned rules.

Note carefully: this isn’t an argument against rent control per se. It’s an argument that pretending rent control is a simple solution creates worse implementation. A nuanced approach would acknowledge the trade-offs upfront: rent control provides immediate relief to current renters but reduces supply over time, so it must be paired with policies that incentivize new construction and maintain existing stock. That’s complicated. It doesn’t fit in a soundbite. So the political system rewards the simple version, and we get the accumulated dysfunction.

The same pattern appears in minimum wage policy. The simple promise: “Raise the floor to help workers.”

The complex reality: employment effects vary by region (urban vs. rural), by industry (service vs. retail vs. manufacturing), by business size (chains vs. small businesses), and by local cost of living. A $15 minimum wage in San Francisco differs fundamentally from a $15 minimum wage in rural Mississippi.

Pretending this is simple produces one-size-fits-all federal policy that works poorly everywhere. Some regions need higher minimums, some need lower. Some industries can absorb increases easily, others face genuine constraints. But acknowledging this complexity makes you vulnerable to the soundbite: “So you think workers in Mississippi deserve less than workers in California?”

The technically correct answer—”No, I think the same real wage requires different nominal wages in different cost-of-living environments, and optimal policy requires regional variation”—is politically fatal. So we get crude approximations that create unintended consequences requiring further interventions.

The COVID-19 stimulus demonstrates the same failure at massive scale. In Part 1, we showed how the precedent trap led policymakers to apply the 2008 demand-side playbook to a supply-side crisis. We knew they were different types of crises. Undergraduate macroeconomics distinguishes demand shocks (people can’t or won’t buy) from supply shocks (goods and services can’t be produced or delivered).

But the soundbite imperative and precedent trap forced application of the last solution to the current problem. The result: significant inflation as demand stimulus hit supply constraints. The technical debt accumulates. Now we face interest rate increases to combat inflation, which creates housing affordability problems, which require new interventions, each adding more complexity.

The lesson is universal: Complexity doesn’t disappear when we ignore it—it just becomes unmanageable. Technical debt accumulates. Systems degrade. Eventually, cascading failures become inevitable.

The government shutdown that opened Part 1 represents the endpoint of this process: so much accumulated technical debt—expired authorities, depleted contingency funds, interacting policy requirements, overlapping jurisdictions—that the system cannot process even routine operations without failure. SNAP benefits got cut in half not because anyone voted to cut them, but because the technical debt embedded in the system made that outcome unavoidable once funding lapsed.

The Endpoint of Accumulated Failure

We’ve diagnosed two catastrophic failure modes that emerge when the anti-intellectual architecture confronts genuine complexity:

The Novelty Gap: The system cannot recognize genuinely new problems. It forces novel challenges into old categories (AI becomes “like social media,” climate becomes “like 1970s regulation,” digital services become “like books”). Analogous reasoning produces category errors. First-principles thinking gets punished as academic. When problems are genuinely unprecedented, the architecture fails completely.

The Technical Debt Cascade: Refusing to acknowledge complexity upfront doesn’t eliminate complexity—it pushes it downstream into implementation where it accumulates as unmaintainable cruft. Simple promises create convoluted laws. Unintended consequences compound over time. Each intervention requires further interventions. Systems become progressively less functional until cascading failures become inevitable.

Both failure modes share a common assumption: that simplicity is a virtue and complexity is a cost to be minimized.

But what if the opposite is true?

What if high-performing systems in every domain—engineering, medicine, aviation, monetary policy—have evolved toward greater sophistication over time, not because complexity is preferred, but because reality requires it?

What if the political system’s aversion to complexity isn’t just a flaw—it’s the fundamental reason governance fails while other domains succeed?

The government shutdown demonstrates the endpoint: Day 43 of operational failure, not because anyone wanted it, but because the accumulated technical debt made it unavoidable. SNAP benefits cut in half. Children losing Head Start access. Airports approaching failure. These aren’t political consequences—they’re system failures that occur when architecture cannot accommodate complexity.

The failures are diagnosed. The question is whether we have the courage to acknowledge what the evidence demands.

In Part 3, we’ll examine the sophistication imperative: why every high-performing system evolves toward complexity, why governance must do the same, and why the knowledge we need already exists. Not theoretical possibilities—concrete examples from domains that work.

The diagnosis is complete. The question is whether we’ll learn from it.

This is Part 2 of a four-part series on the anti-intellectual architecture of American politics. Part 1 diagnosed the structural incentives that punish nuance. Part 2 examines the two catastrophic failure modes when this architecture confronts complexity. Part 3 will show why sophistication is inescapable for effective governance. Part 4 will provide the engineering solutions.

Endnotes

[1] See Diamond, Rebecca, Tim McQuade, and Franklin Qian, “The Effects of Rent Control Expansion on Tenants, Landlords, and Inequality: Evidence from San Francisco,” American Economic Review 109(9): 3365-3394 (2019). Also see Glaeser, Edward L., “Triumph of the City” (2011) and Arnott, Richard, “Time for Revisionism on Rent Control?” Journal of Economic Perspectives 9(1): 99-120 (1995) for comprehensive reviews of rent control literature demonstrating these standard effects.